According to ISG’s 2023 Future Workplace study, 85% of enterprises believe that investment in generative AI technology in the next 24 months is important or critical. This means most of us can expect to be collaborating with AI in the near future. Much ink has been spilled on the burgeoning capabilities of different AI tools and how they can help us be more efficient and effective. Far less attention has been given to the capabilities we will need to work with AI.

The Human Challenge of Using Generative AI

The problem is: humans are equipped with a set of internal theories about how minds work. This capacity is called theory of mind, and it is essential for cooperation, collaboration and empathy. Basically, we take our own experiences of having internal desires, wants and thoughts, and project them onto other people to explain their behaviors. If I feel hungry, I reach for an apple and bite it. Ergo, if Sally reaches for an apple and bites it, she’s probably hungry. This system generally works well for human-to-human interactions. Most times humans come into conflict, the root cause is that their unspoken assumptions about the motivations behind other people’s behaviors are wrong. Now, take that problem and multiply it by 1,000.

AI may seem very human. Studies have shown people tend to think of AI as more person-like and less tool-like. This means people apply the same beliefs about human mental processes to AI. But AI processes are not the same as human mental processes. ChatGPT can use immense amounts of computational power and training data to produce output that reads like it was produced by a person. However, the computation rules that produce the output are nothing like those our brains use. The risks posed by generative AI vary depending on the use case, but all share a core thread – a misapplied sense that generative AI functions the same way as a person.

Examples of AI Use Cases by Function

Customer-service agents: The emerging ability of generative AI to model empathy in conversations makes it likely we will soon see more complex customer-service issues being handled by AI agents. While this may be a successful way to reduce costs and improve the speed of issue resolution, the potential also exists that this application will alienate people. That is because while generative AI can mimic empathy, it cannot feel empathy. Why does this matter? Because one of the three key components of empathy is the emotional aspect – when you physically feel along with the other person. When this is absent, people rightly feel the empathy being expressed is not genuine.

The extent to which this affects people’s interactions with AI will probably differ across contexts. People may be willing to accept feigned empathy in an AI customer-service agent, but not in a therapist. Important work remains to be done in determining when this generative AI capability will be appropriate and when it will not.

Augmenting knowledge work: AI has a powerful ability to generate new content. This is likely to lead to a boom in the use of AI to supplement a knowledge worker’s skills. However, enterprises need to be aware of significant risks. For example, a group of lawyers asked ChatGPT for precedents to help support their case, only to find several of those provided were fictional. The lawyers incorrectly assumed that ChatGPT, like a person, would try to find existing precedent and let them know if there wasn’t any. But this is not what ChatGPT does. It may prioritize producing realistic-sounding responses over truthful ones. This example is only the tip of the iceberg in what will likely become a casebook of cautionary tales.

In fact, my own role, which currently focuses on helping businesses and IT work together more smoothly, will likely involve resolving the inevitable workplace conflicts that arise between AI and people. AI has immense potential to help us, but only if we know how to help ourselves be good teammates.

Selecting the Right AI Partner

For an AI strategy to succeed, enterprises need to choose the right set of tools, in combination with the right human intelligence. In this context, cognitive psychologists and other experts can play a key role in helping smooth the human-AI interface, using their knowledge of the way people think and the way AI works to create a roadmap for overcoming these differences. It is essential that, when enterprises are selecting their AI partners, they choose partners not only for technical competence but also for their understanding of human psychology and the challenges the workforce is likely to face when using AI. This will be at the crux of designing human-centered AI.

How to Build an AI Control Plane

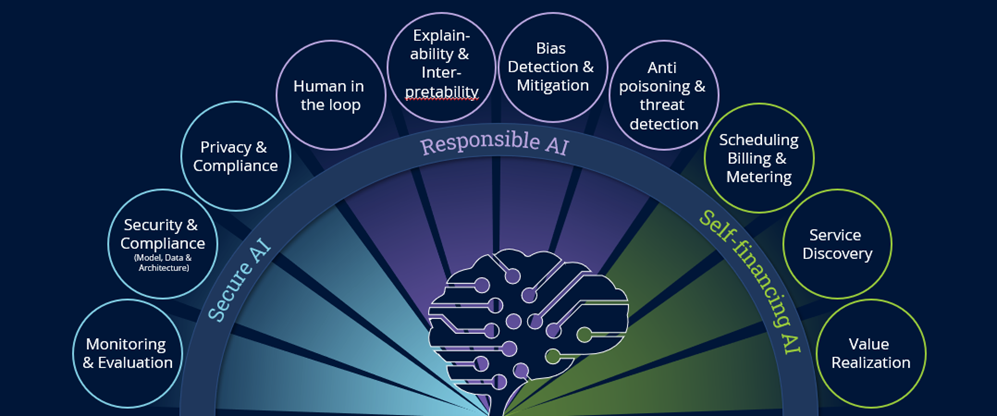

AI-powered tools and solutions will continue to crowd the market and force leaders to continuously reevaluate the best tools and services – and the right way to prepare human employees to deploy and use them. To be successful with AI design, acquisition and deployment, enterprises must develop a perspective that centers on three essential questions:

- How do we prepare our workforce for responsible and optimized use of AI?

- How do we secure our use of AI?

- How do we design and deploy self-financing AI?

In times of constant change, it’s helpful to establish an AI control plane that will provide a consistent approach for adopting and maintaining new technology. Working with a qualified advisor to establish these guiding principles can help you understand how to adapt, how to avoid surprises and unplanned costs, and how to prepare your workforce.

Figure 1: ISG's AI Control Plane

ISG advises on the use of AI in the workplace and also analyzes how satisfied employees are with workplace tech tools like AI and automation. Contact us to learn more.